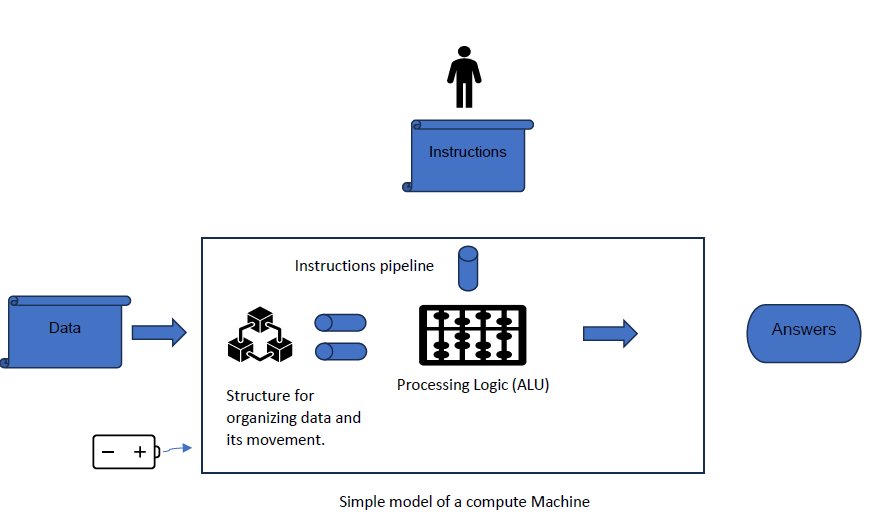

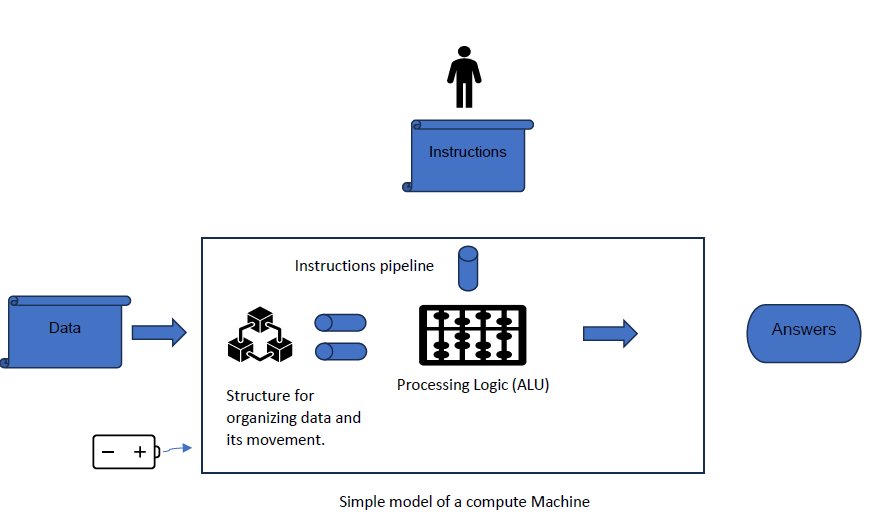

When I refer to a "compute machine," I mean a specific hardware structure built from digital building blocks. These include logic processing engines (ALUs), an assembly pipeline that manages data flow to and from those engines, algorithms for fetching instructions and data, memory and cache organization, extensive data-movement networks (Network on Chip), power units, and more. The optimal machine architecture depends on the size and type of the data payloads and instructions it needs to process.

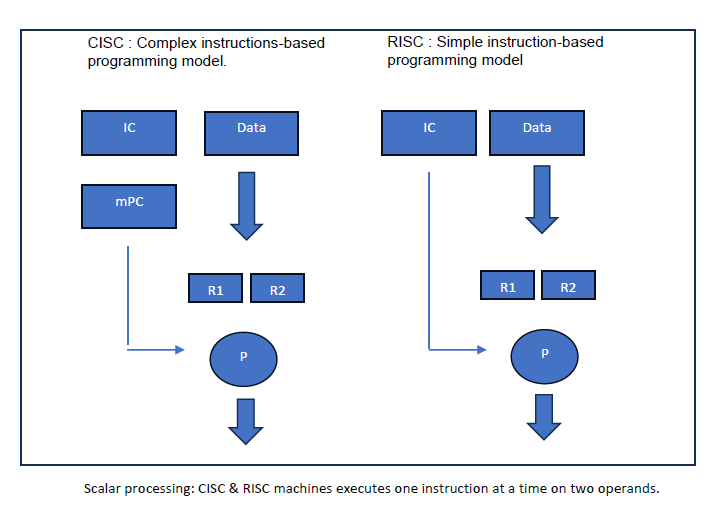

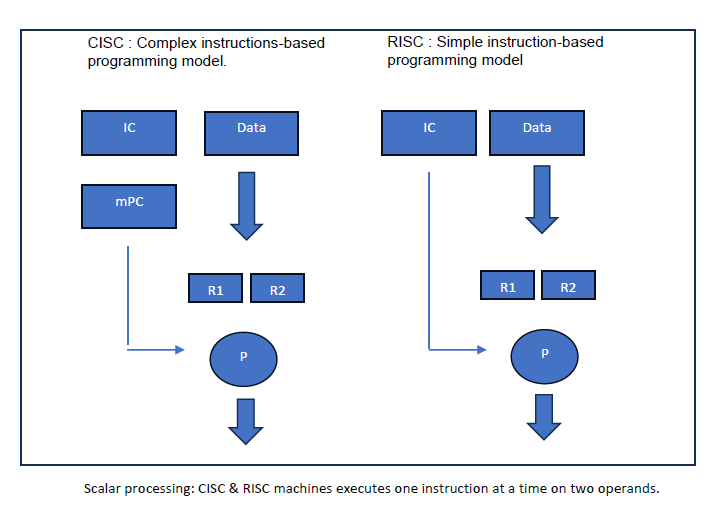

Over the past few decades, two primary architectures have profoundly shaped the computing landscape: CISC (Complex Instruction Set Computer) and RISC (Reduced Instruction Set Computer). They differ in the types of instructions they support and in the pipelines used to process those instructions. Fundamentally, both execute instructions sequentially, with each instruction typically operating on one data element at a time, a process known as scalar processing.
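To make the contrast concrete, here is a minimal toy model in Python. It is a hypothetical sketch, not a real instruction set: the opcodes `ADDM`, `LOAD`, `ADD`, and `STORE` and the helper functions are invented for illustration. The CISC-style program uses one complex memory-to-memory instruction per element, while the RISC-style program uses a load/store sequence of simpler instructions. In both cases the machine runs instructions one after another and each instruction touches a single data element, which is what scalar processing means.

```python
# Hypothetical toy machine, for illustration only (not a real ISA).
# Memory is a flat list; registers are a small dict.

def run(program, memory):
    """Execute (opcode, *operands) tuples one at a time; return instruction count."""
    regs = {}
    count = 0
    for op, *args in program:
        count += 1
        if op == "ADDM":      # CISC-style: add two memory operands in one instruction
            dst, src = args
            memory[dst] = memory[dst] + memory[src]
        elif op == "LOAD":    # RISC-style: copy one memory value into a register
            reg, addr = args
            regs[reg] = memory[addr]
        elif op == "ADD":     # RISC-style: arithmetic only between registers
            rd, rs1, rs2 = args
            regs[rd] = regs[rs1] + regs[rs2]
        elif op == "STORE":   # RISC-style: write one register back to memory
            reg, addr = args
            memory[addr] = regs[reg]
        else:
            raise ValueError(f"unknown opcode {op}")
    return count

def cisc_program(n):
    # a[i] = a[i] + b[i] with one complex instruction per element
    return [("ADDM", i, n + i) for i in range(n)]

def risc_program(n):
    # the same work as four simple instructions per element
    prog = []
    for i in range(n):
        prog += [("LOAD", "r1", i), ("LOAD", "r2", n + i),
                 ("ADD", "r1", "r1", "r2"), ("STORE", "r1", i)]
    return prog

a = [1, 2, 3, 4]
b = [10, 20, 30, 40]
mem_cisc = a + b   # a occupies addresses 0..3, b occupies 4..7
mem_risc = a + b
cisc_count = run(cisc_program(4), mem_cisc)
risc_count = run(risc_program(4), mem_risc)
print(mem_cisc[:4], cisc_count)  # [11, 22, 33, 44] 4
print(mem_risc[:4], risc_count)  # [11, 22, 33, 44] 16
```

The RISC-style program issues four times as many instructions, but each one is simpler and more uniform, which is generally what makes RISC pipelines easier to design. Either way, the work advances one element per instruction.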

Throughout their evolution, numerous variants of these fundamental architectures have emerged and been adopted to meet different power and performance requirements, in devices such as mobile phones, embedded systems, PCs, and data servers. These architectures have effectively met the data-processing and programming-model needs of the past four decades.

AI applications bring an emerging need for specific data structures and algorithm-processing capabilities. This raises the question of whether CISC and RISC architectures can meet these requirements or whether different machines are necessary. I will delve into this topic in the next blog post.